Feature spotlight: Universal instrument integration with Ganymede’s Agents

Need to move data from lab instruments to the cloud? We've got just the right out-of-the-box toolkit for you.

The digital "dry" lab may be all in on the cloud, but wet labs will almost always continue as entirely on-premises (AKA “on-prem”) operations. From flow cytometers to bioreactors to HPLCs and beyond, the standard biotech lab has dozens, or in some cases hundreds, of instruments running experiments.

Right now, getting data from those machines into a centrally stored database in the cloud—or even further downstream into a system of record like an electronic lab notebook (ELN) or laboratory information management system (LIMS)—often requires a series of highly manual processes cobbled together across teams.

At smaller labs, it’s not uncommon for a scientist to literally have to plug in a USB stick, download files, walk over to a PC, plug the USB in again, manually move the right files over to a shared drive, and then move them again to and from their local laptop. Not only is this time-consuming, but even worse, scientists are also at risk of misplacing the USB.

Larger organizations tend to have expanded IT, software engineering, and even data science teams that take a more sophisticated approach to instrument data handling. That’s not to say it makes instrument data integration any easier on the whole—it just passes the problems of solutions engineering from one team (scientists) to another (software engineers).

Consider how tedious it is to build and maintain end-to-end instrument integration using tools from a basic cloud provider like AWS.

The infrastructure tends to be purely functional and its implementation non-collaborative and disconnected from the evolving needs and wants of the scientific teams. The data itself often:

- lacks contextual metadata;

- gets stored in incompatible raw formats;

- or, at best, ends up stashed in semi-structured formats within an ELN or LIMS.

What if, instead, there was an automatic way to get data from on-prem instruments and other data sources into the cloud? What if we took it one step further and automatically structured that data into an accessible and interoperable format?

The good news is that there is a way to achieve this.

Today, we’re spotlighting one of Ganymede’s software features that helps with this very issue: Agents.

Our Agents are software connectors for lab instruments that serve as a data capture tool for labs, one that fits seamlessly into existing lab workflows without creating any additional work for scientists. Once installed on either an instrument or an attached local PC, the tool listens 24/7 for data, transfers that data to the cloud, and even tees it up for analysis and downstream integration into ELNs, LIMS, etc. We like to think of these as “set and forget” connections: once they’re installed, they run automatically in the background and can even be updated remotely via cloud-managed logic that is deployed to the Agents.

We’ve already seen Ganymede’s clients realize huge benefits with this feature. Our customers:

- Capture experimental results as soon as they are created and process data right away

- Take manual data entry off scientists’ plates, saving them critical time and instead enabling them to dedicate their energy to experiments and analysis

- Decrease the time it takes to hand off data between capture and analysis

- And more.

Curious how Agents work and what they can do for your lab?

Read on to learn more about five of the top benefits and features.

1. Create Instrument Connections Quickly With Configuration Templates

Our Agents come with several configurations right out of the box for the most common needs. When we designed this product, we saw users had three key use cases:

- To connect/integrate with existing original equipment manufacturer (OEM) instrument software

- To collect information on a predetermined schedule

- To monitor a local file system for the presence of new information

Our Agent configurations cover all three of these common needs, as well as a few other secondary use cases.
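To make the third use case concrete, here is a minimal sketch of what "monitoring a local file system for new information" can look like. It is an illustration only, not Ganymede's implementation: the function name `find_new_files` and the idea of tracking file names in a `seen` set are assumptions made for this example.

```python
from pathlib import Path
from typing import List, Set


def find_new_files(watch_dir: Path, seen: Set[str]) -> List[Path]:
    """Return files in watch_dir that have not been seen before.

    Hypothetical helper: every returned file name is added to `seen`,
    so calling this again only reports files that arrived since.
    """
    new_files = []
    for path in sorted(watch_dir.iterdir()):
        if path.is_file() and path.name not in seen:
            seen.add(path.name)
            new_files.append(path)
    return new_files
```

Calling `find_new_files` on a timer (for example, every few seconds) also covers the "predetermined schedule" case: each call picks up whatever the instrument has written since the last one.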

Take, for example, running a bulk upload/backfill of files from the local PC. To get started fast, users can simply select a configuration, customize (if needed) to the level they want, and install the connection. There’s no need to wait for a contractor or someone else within the lab to build something from scratch.

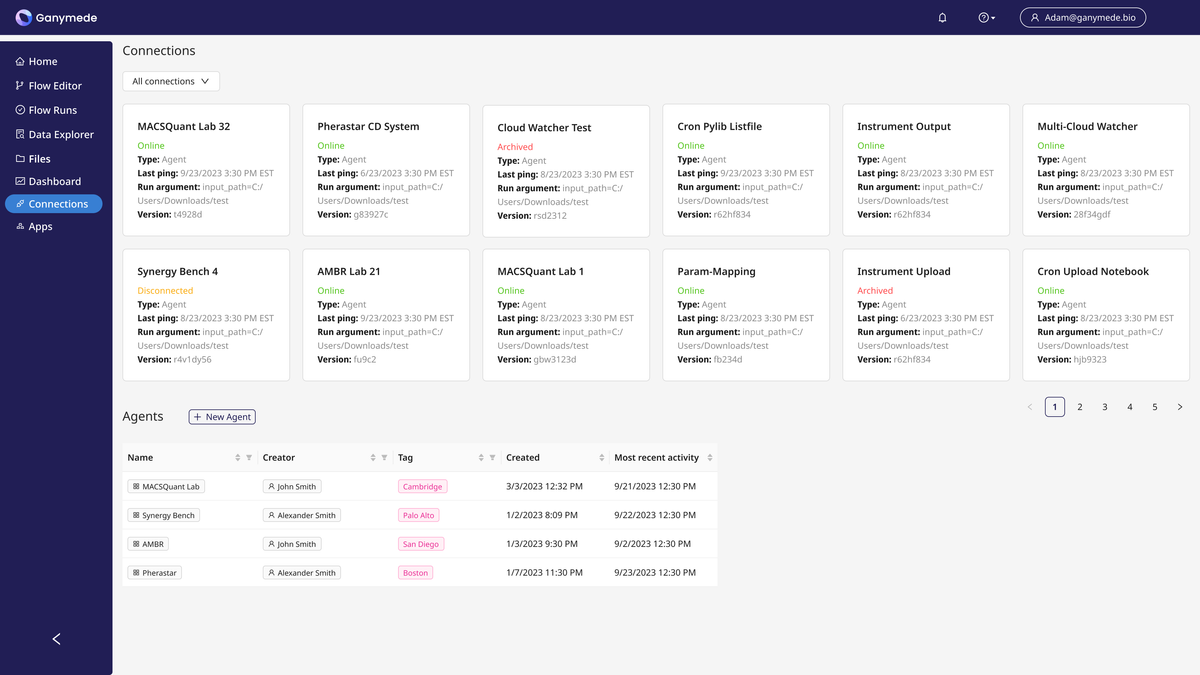

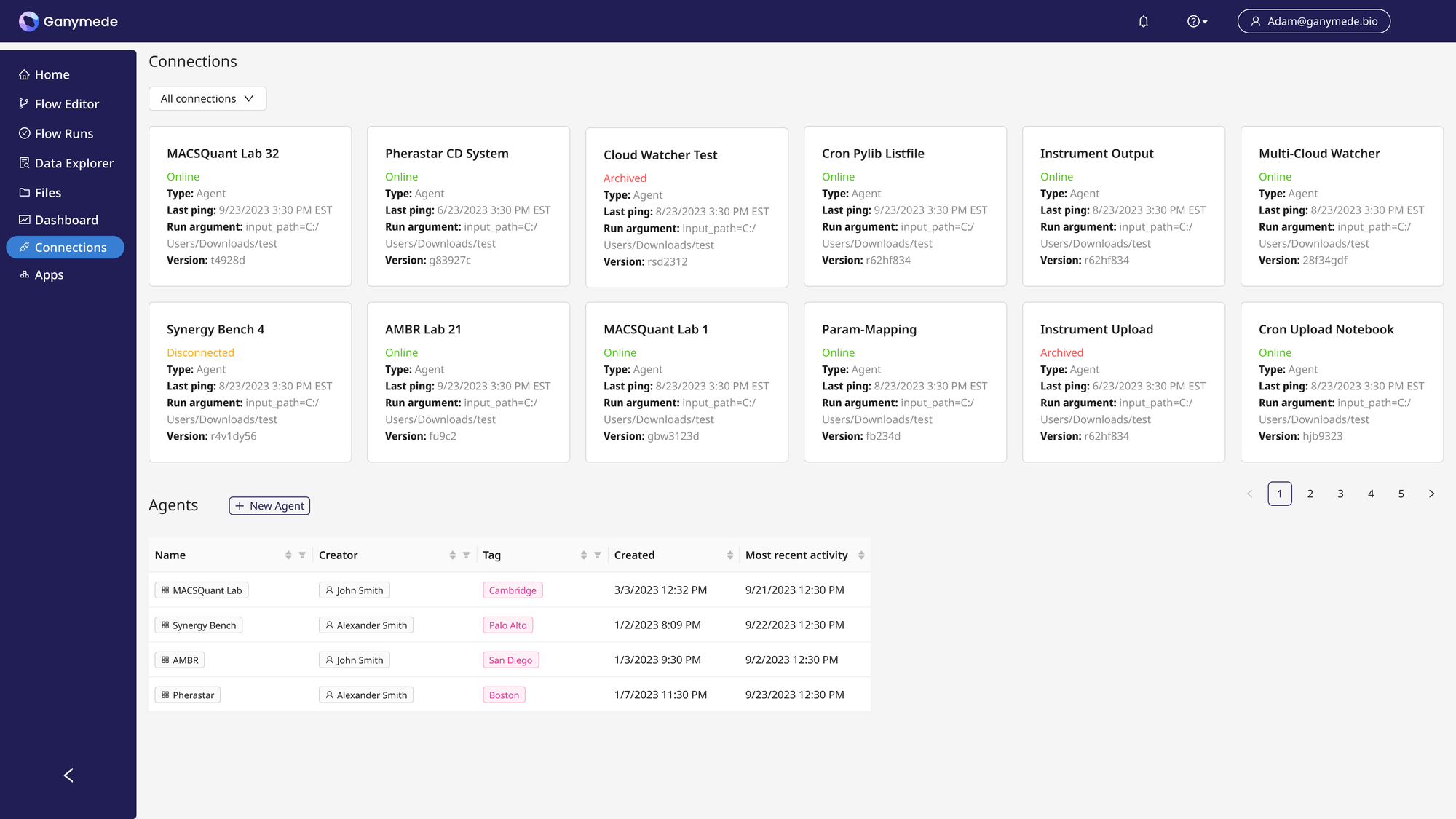

Creating new instrument connections

TL;DR: With out-of-the-box configuration templates for popular use cases, you can quickly and easily build and run an Agent without waiting for help.

2. Customize As Much (Or As Little) As You Want

When it comes to code, we know that software engineers and developers want the option to customize and get as deep in the weeds as possible—or not at all. That’s why Ganymede’s scientific data cloud is designed to be code-flexible, running on our Lab-as-Code technology.

Our Agents tool is no different.

Configure the Agent and Connection code as much or as little as you like. Create the custom data flows and data collection batches needed to automate your lab’s unique scientific experiments. For example, Agents can serve as a data aggregator, collecting multiple files before running downstream processing. That means if an experiment requires data from, say, three different files, our Agents can be customized to wait for all three files, aggregate them, and upload them to your Ganymede Cloud for downstream processing.
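The three-file example can be sketched in a few lines. This is a hedged illustration rather than actual Agent code: the `FileBatcher` class and the `<experiment>_<part>` file naming convention are assumptions invented for the example.

```python
from typing import Dict, List, Optional, Set


class FileBatcher:
    """Holds files per experiment until every required part has arrived.

    Assumes (hypothetically) that files are named "<experiment>_<part>",
    e.g. "exp42_plate.csv".
    """

    def __init__(self, required_parts: Set[str]) -> None:
        self.required = set(required_parts)
        self.pending: Dict[str, Dict[str, str]] = {}

    def add(self, filename: str) -> Optional[List[str]]:
        """Register a file; return the full batch once it is complete."""
        experiment, _, part = filename.rpartition("_")
        if not experiment or part not in self.required:
            return None  # not a file this batcher is waiting for
        parts = self.pending.setdefault(experiment, {})
        parts[part] = filename
        if set(parts) == self.required:
            del self.pending[experiment]
            # Complete: hand the batch off in a stable (sorted) order.
            return [parts[p] for p in sorted(self.required)]
        return None
```

With `FileBatcher({"plate.csv", "meta.json", "curve.csv"})`, the first two files for an experiment return `None`; the third returns the full list, which is the point at which an upload would be triggered.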

This sort of flexibility can have big benefits for labs, especially when it comes to setting up new assays. With an Agent in place, getting an assay into production goes much more quickly.

Easily customize each Agent's code with our integrated development environment (IDE).

TL;DR: Plug in as much custom business logic as you like to decrease time to assay readiness.

3. Put Time Back Into Developers’ Hands

Developers and data engineers in the life sciences care about the impact their code has. Every bit and byte contributes to the discovery of new drugs that could change, or even save, human lives. That’s why it’s such a shame when they spend hours on monotonous tasks like reconciling code changes after updating a config.

Ganymede takes a lot of the busywork away. Whenever there’s a change to an Agent, our tool automatically builds an executable file (a Windows or Linux binary), which is then automatically served to the developer for first-time installation. Existing Connections can receive those updates remotely, without interrupting an in-progress experiment or needing to get the scientists involved.

Easily download and implement Agents as executable files for first-time instrument installations.

TL;DR: Let us handle CI/CD. Spend time writing code that supports scientists, rather than building tools to deliver software updates or maintaining basic infrastructure.

4. Install Once, Use Forever

Nothing’s worse than deploying software that becomes a maintenance nightmare. No one wants to spend their days hunting down and debugging hundreds of software programs. With Agents, you don’t have to.

Once an Agent is installed, you have the option to automatically deploy future changes to every instance. That means that if an instrument manufacturer updates their software and the Agent code must change as a result, those code changes are deployed to all active connections of that Agent type. In other words: you update your Agent in one place, and it deploys everywhere.
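As a rough mental model of "update in one place, deploy everywhere," consider the sketch below. It is not how Ganymede implements remote deployment; the `Connection` record, its fields, and the `roll_out` function are all hypothetical names chosen for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Connection:
    """Hypothetical record of one installed connection."""
    name: str
    agent_type: str
    version: int
    active: bool = True


def roll_out(connections: List[Connection], agent_type: str, new_version: int) -> List[str]:
    """Bump every active connection of `agent_type` that is behind `new_version`.

    Returns the names of the connections that were updated.
    """
    updated = []
    for conn in connections:
        if conn.active and conn.agent_type == agent_type and conn.version < new_version:
            conn.version = new_version
            updated.append(conn.name)
    return updated
```

Inactive connections and connections of other Agent types are left untouched, and running the same rollout twice is a no-op the second time.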

There’s no need to physically update anything with a USB or to interrupt experiments in progress with slow software updates. Scientists save at least 20% of their time by avoiding distracting maintenance and research delays.

Automatically update the Agent settings with remote deployment.

TL;DR: Save time by pushing changes across instrument PCs quickly, all without interrupting live experiments.

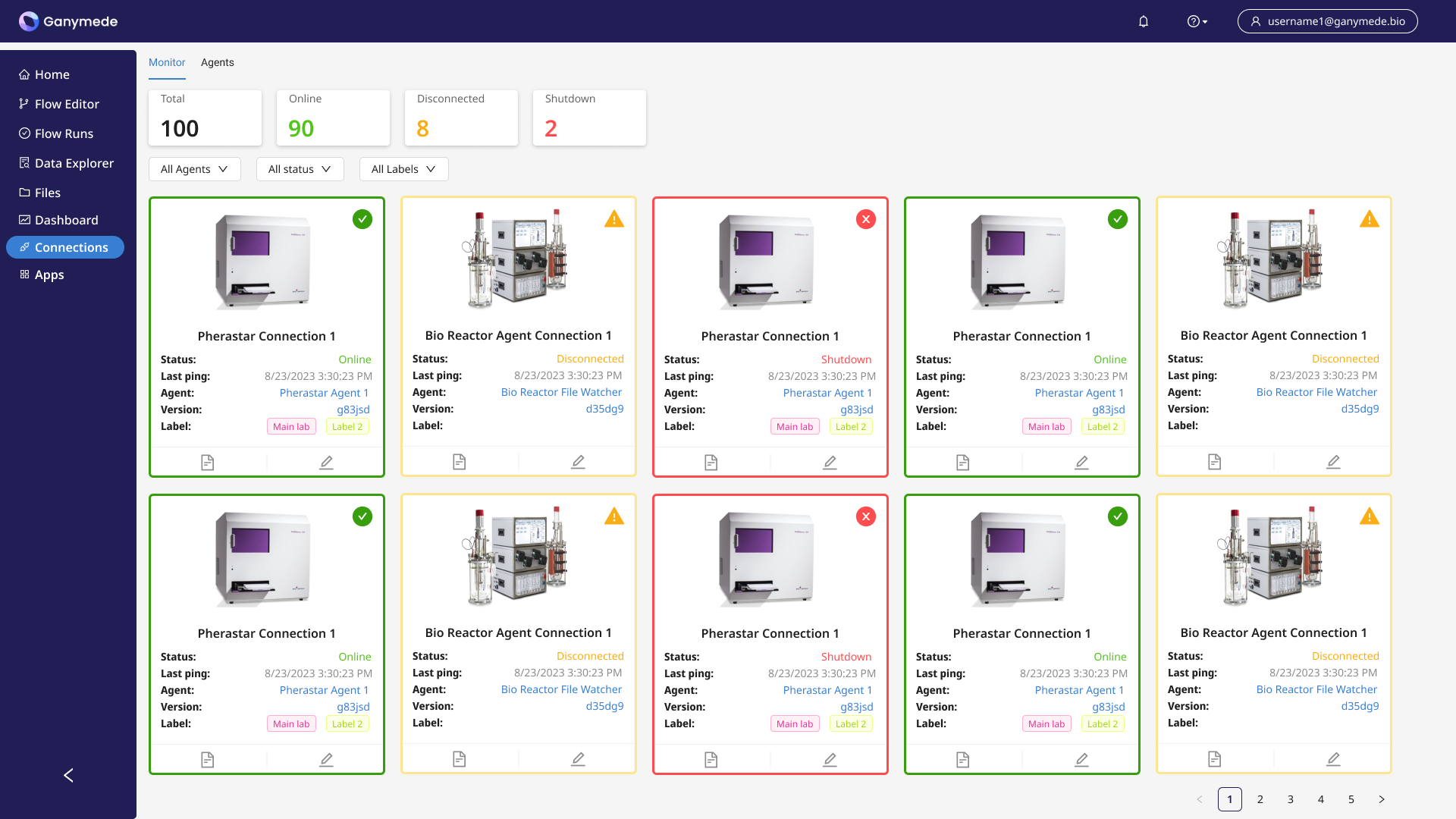

5. Monitor Connections Remotely

Labs can have dozens of Connections, touching every instrument in the room. You may be wondering: what happens if something goes wrong? It could be disastrous to find out hours, days, or even weeks after the fact that an instrument’s data isn’t automatically uploading to the cloud.

Ganymede has solved this problem by building an accessible and intuitive dashboard to monitor connections in real time. See data activities from across your entire lab and quickly spot which connections may need debugging. If there’s an issue, you’ll be able to spot it quickly—and get that data flowing again ASAP.
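One simple way to think about spotting a connection that needs attention is to flag any that has been silent for too long. The sketch below is illustrative only and does not describe the dashboard's internals: `stale_connections`, the `last_upload` mapping, and the `max_silence` threshold are assumptions for this example.

```python
from datetime import datetime, timedelta
from typing import Dict, List


def stale_connections(
    last_upload: Dict[str, datetime],
    now: datetime,
    max_silence: timedelta,
) -> List[str]:
    """Return names of connections whose last upload is older than max_silence."""
    return sorted(
        name for name, seen in last_upload.items() if now - seen > max_silence
    )
```

In practice the right threshold would differ per instrument: a plate reader that runs hourly and a bioreactor that logs continuously should not share the same definition of "silent."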

TL;DR: 24/7 dashboard monitoring means you can track data activities in the lab, and troubleshoot issues that prevent data capture.

Agents: The Bridge Between On-Prem Data Generation and Cloud-Native Data Storage

As labs embrace digitalization, modern biotechnologies, and automation, they create more and more data. All too often, people think of acquiring that data as just recording everything from every endpoint. But that’s not enough. Data collection alone won’t empower a lab to process it.

Actually garnering insights and deriving value from data requires not just collecting but also storing and processing information in a way that allows for analysis.

Ganymede’s Agents tool shifts the idea of data collection from acquiring information to moving that information downstream. We automate as much as possible, saving time, increasing efficiency, removing human bottlenecks, and moving data towards its final destination and purpose.

Learn More About Our Agents: View The On-Demand Webinar Now

Highlights

- Presentation begins [2:15]

- What are scientific data clouds and why they're the next evolution in lab data management [5:05]

- Introducing the digitalization maturity curve [8:40]

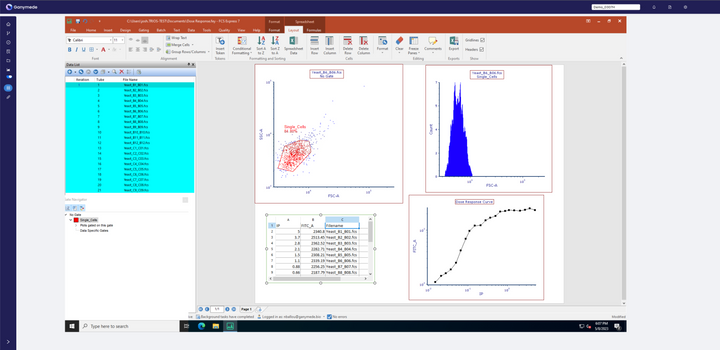

- Live plate reader experiment [18:00]

- Demonstrating our Universal Connectors in action [24:15]

- Looking behind the curtain to see how the Universal Connectors are customized and configurable [29:55]

- The role that instrument connectors have within the broader scientific data cloud approach [33:00]

- The "right" digitalization tech stack by therapeutic development stage [38:55]

- Preventing data pain with data automation [44:50]